Hierarchical Deep Co-segmentation of Primary Objects in Aerial Videos

Jia Li* Pengcheng Yuan Daxin Gu

State Key Laboratory of Virtual Reality Technology and Systems, Beihang University

Yonghong Tian*

School of Electronics Engineering and Computer Science, Peking University

Published in IEEE Multimedia, July 2018

Dataset

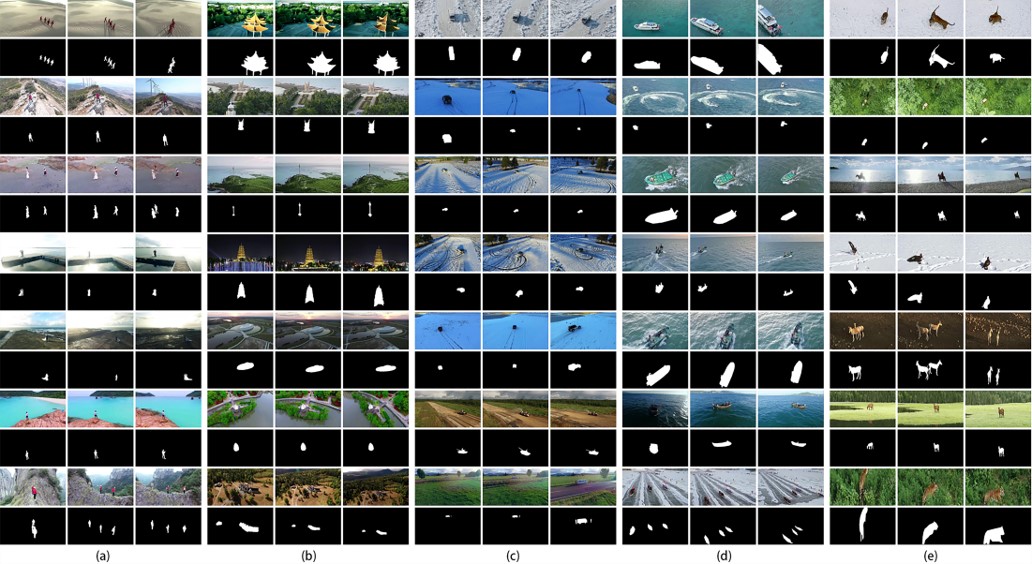

Frames and ground-truth masks from APD. (a) APD-Human (95 videos), (b) APD-Building (121 videos), (c) APD-Vehicle (56 videos), (d) APD-Boat (180 videos) and (e) APD-Other (48 videos).

Approach

The framework of our approach, shown above, consists of three major stages: 1) hierarchical temporal slicing of aerial videos, 2) mask initialization via video object co-segmentation, and 3) mask refinement within neighborhood reversible flows.
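To make the first stage more concrete, here is a minimal sketch of one way a video could be sliced hierarchically along the time axis. This page does not specify the slicing scheme, so the binary splitting rule, the function name `hierarchical_slices`, and the `levels` parameter are all assumptions made for illustration, not the authors' implementation.

```python
from typing import List, Tuple

# A clip is a half-open frame range [start, end).
Clip = Tuple[int, int]


def hierarchical_slices(num_frames: int, levels: int) -> List[List[Clip]]:
    """Split a video into clips at several temporal levels.

    ASSUMPTION: level k divides the video into 2**k near-equal clips.
    The actual slicing rule used by HDC is not stated on this page.
    """
    if num_frames <= 0 or levels <= 0:
        raise ValueError("num_frames and levels must be positive")

    hierarchy: List[List[Clip]] = []
    for level in range(levels):
        parts = 2 ** level
        # Integer boundaries that together cover every frame exactly once.
        bounds = [i * num_frames // parts for i in range(parts + 1)]
        # Skip empty clips, which appear when parts exceeds num_frames.
        clips = [
            (bounds[i], bounds[i + 1])
            for i in range(parts)
            if bounds[i + 1] > bounds[i]
        ]
        hierarchy.append(clips)
    return hierarchy


if __name__ == "__main__":
    for level, clips in enumerate(hierarchical_slices(8, 3)):
        print(level, clips)
```

Under this assumed scheme, coarse levels give long clips in which a primary object is likely to recur, while fine levels give short clips with little appearance change. How the later co-segmentation and refinement stages consume these clips is described in the paper.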

Benchmark

Performance benchmark of HDC and state-of-the-art models before being fine-tuned on VOS and APD. The best and second-best models are marked in bold and underlined, respectively.

Citation

- Jia Li*, Pengcheng Yuan, Daxin Gu, Yonghong Tian*. Hierarchical Deep Co-segmentation of Primary Objects in Aerial Videos. IEEE Multimedia Magazine.

- Paper: [arXiv]
- Resources: [APD Dataset, 6.7G] [HDC-Code, 280.6MB]