Multi-class Part Parsing with Joint Boundary-Semantic Awareness

Yifan Zhao, Jia Li*, Yu Zhang

State Key Laboratory of Virtual Reality Technology and Systems, Beihang University

Yonghong Tian

School of Electronics Engineering and Computer Science, Peking University

Approach

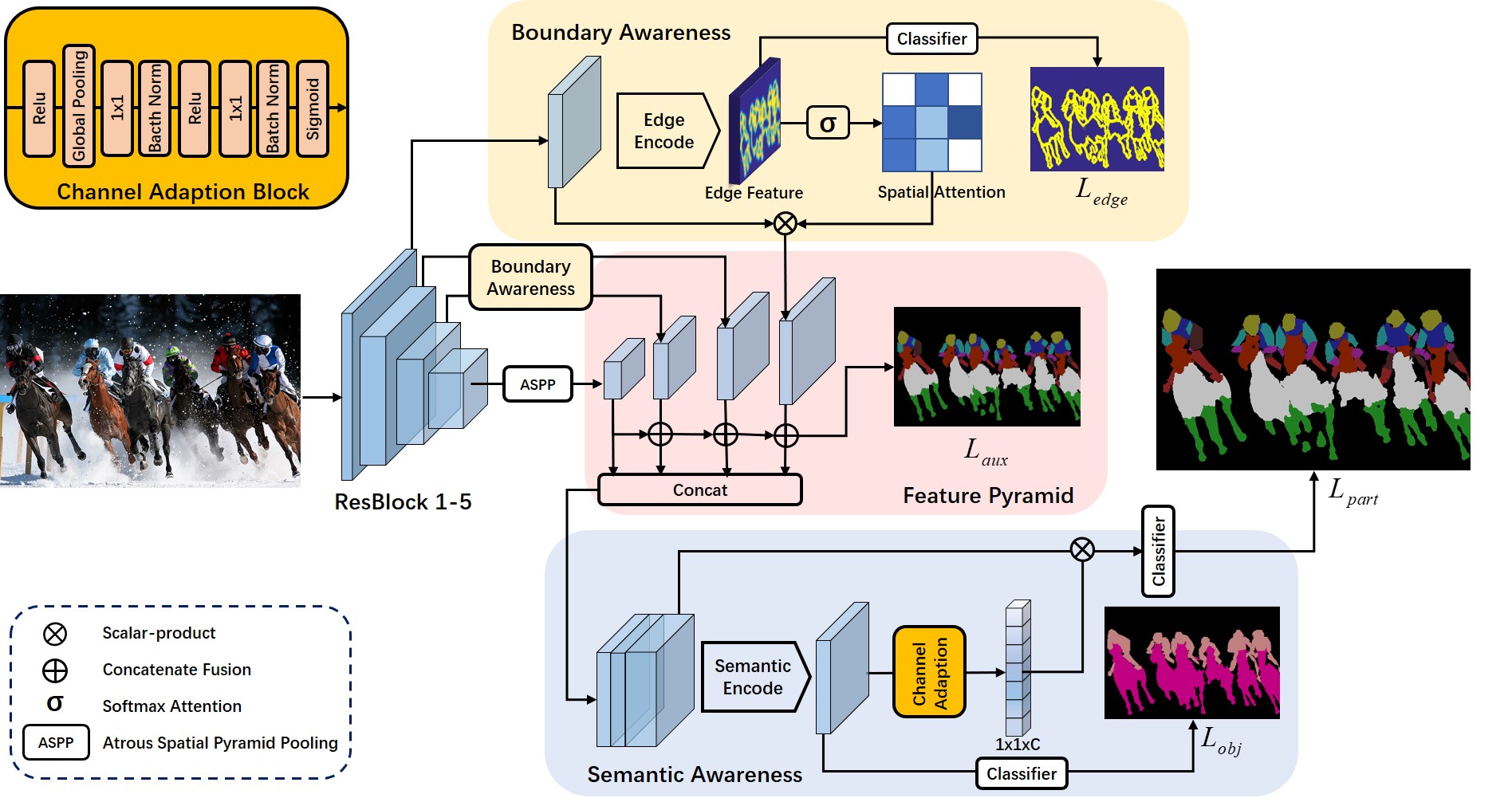

Our joint Boundary-Semantic Awareness Network (BSANet) is mainly composed of a boundary-aware spatial selection module and a semantic-aware channel selection module. The boundary-aware module aggregates local features near boundaries at the low level and semantic context at the high level, and is supervised by an edge regression loss. The semantic-aware module uses the supervised semantic object context to enhance the expression of class-relevant feature channels.
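To make the two selection ideas concrete, here is a minimal pure-Python sketch. It is not the BSANet implementation: the function names `spatial_select` and `channel_select`, the sigmoid gating, and the list-based feature layout are all assumptions chosen for illustration. The sketch only shows the general pattern of a per-pixel gate that favors low-level features near boundaries and a per-channel gate that rescales feature channels.

```python
import math


def sigmoid(x):
    """Logistic function mapping a real score to a gate in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def spatial_select(low, high, boundary_logits):
    """Hypothetical spatial selection over H x W maps (lists of rows).

    Where the boundary score is high the gate approaches 1 and the
    low-level feature dominates; elsewhere the high-level feature does.
    """
    out = []
    for low_row, high_row, logit_row in zip(low, high, boundary_logits):
        row = []
        for l, h, z in zip(low_row, high_row, logit_row):
            g = sigmoid(z)
            row.append(g * l + (1.0 - g) * h)
        out.append(row)
    return out


def channel_select(features, context_logits):
    """Hypothetical channel selection over C maps of size H x W.

    Each channel is multiplied by a gate derived from one context score,
    so channels with high scores are kept and the rest are suppressed.
    """
    return [
        [[sigmoid(z) * v for v in row] for row in channel]
        for channel, z in zip(features, context_logits)
    ]


# Toy usage: one row of two pixels, the first lying on a boundary.
fused = spatial_select([[1.0, 1.0]], [[0.0, 0.0]], [[20.0, -20.0]])
gated = channel_select([[[2.0, 4.0]], [[2.0, 4.0]]], [0.0, 20.0])
```

In a real network the gates would be predicted by learned layers and trained with the edge and semantic supervision described above; here they are passed in directly to keep the example self-contained.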

Difference and relations

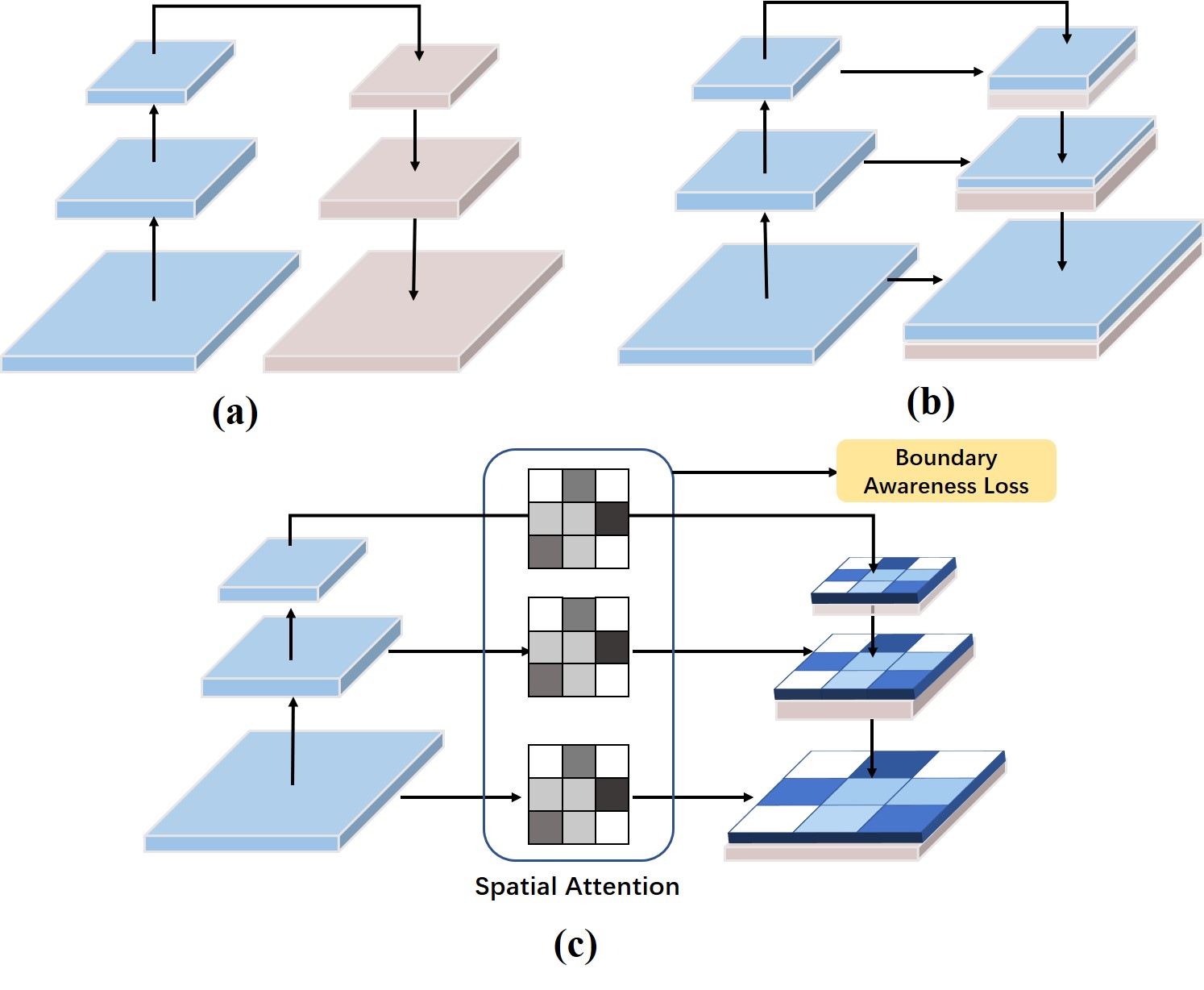

Differences among the three pyramid decoders. (a): Top-down pyramid decoder. (b): Top-down pyramid decoder with feature transfer. (c): Spatial-aware feature pyramid.

Visualizations

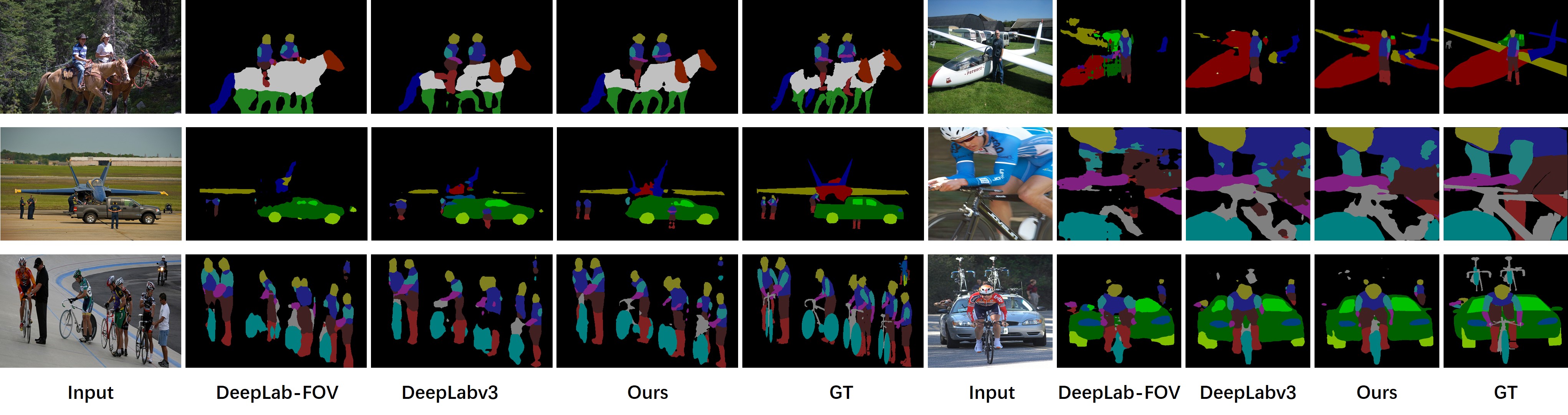

Qualitative comparisons on the PASCAL-Part dataset. Compared to state-of-the-art models, our model generates results with finer local details and better semantic understanding.

Multi-class Benchmark

Segmentation performance (mIoU) on the PASCAL-Part benchmark. Avg.: the average per-object-class mIoU. mIoU: per-part-class mIoU. *: uses a model pretrained on the MS-COCO dataset.

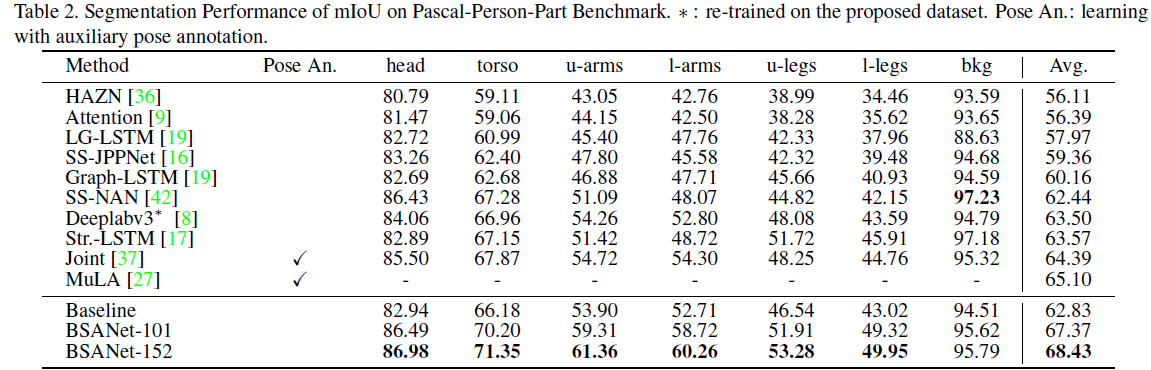
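The two metrics in the caption can be sketched as follows. This is an illustrative reading, not the official evaluation script: the helper names, the flat label lists, and the `part_to_object` mapping are hypothetical, and the sketch assumes that intersections and unions are accumulated over all evaluated pixels before dividing.

```python
def per_class_iou(pred, target, num_classes):
    """Intersection-over-union per part class from flat label lists.

    Returns None for a class that appears in neither list.
    """
    inter = [0] * num_classes
    union = [0] * num_classes
    for p, t in zip(pred, target):
        if p == t:
            inter[p] += 1
            union[p] += 1
        else:
            union[p] += 1
            union[t] += 1
    return [inter[c] / union[c] if union[c] else None
            for c in range(num_classes)]


def mean_iou(ious):
    """Per-part-class mIoU: mean over the classes that are present."""
    valid = [v for v in ious if v is not None]
    return sum(valid) / len(valid) if valid else float("nan")


def object_average(ious, part_to_object):
    """Avg.: mean over object classes of the mean IoU of their parts."""
    groups = {}
    for part, iou in enumerate(ious):
        if iou is not None:
            groups.setdefault(part_to_object[part], []).append(iou)
    per_object = [sum(v) / len(v) for v in groups.values()]
    return sum(per_object) / len(per_object) if per_object else float("nan")


# Toy usage with three part classes; the object grouping is made up.
pred = [0, 1, 1, 2, 2, 2]
target = [0, 1, 2, 2, 2, 1]
ious = per_class_iou(pred, target, num_classes=3)
miou = mean_iou(ious)
avg = object_average(ious, part_to_object=["background", "dog", "dog"])
```

The two numbers differ whenever object classes have different numbers of parts, because Avg. weights every object class equally while mIoU weights every part class equally.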

PASCAL-Person Benchmark

Segmentation performance (mIoU) on the PASCAL-Person-Part benchmark. *: re-trained on the proposed dataset. Pose An.: learning with auxiliary pose annotation.

Update logs

2019/08: We have updated the modified annotations.

2019/12: We have updated the results of the original version.

2020/01: We have updated the preliminary code, which has been restructured for easier reading.

2020/06: We have updated the masks and binary edge annotations.

Citation

Yifan Zhao, Jia Li*, Yu Zhang*, and Yonghong Tian. Multi-class Part Parsing with Joint Boundary-Semantic Awareness.