Cross-Reference Stitching Quality Assessment for 360° Omnidirectional Images

Jia Li*, Kaiwen Yu, Yifan Zhao, Yu Zhang

State Key Laboratory of Virtual Reality Technology and Systems, Beihang University

Long Xu

National Astronomical Observatories, Chinese Academy of Sciences

Dataset Corrigendum of ACM MM 2019

In our ACM MM 2019 paper "Cross-Reference Stitching Quality Assessment for 360° Omnidirectional Images", the phrase "292 quaternions of fisheye images" should be revised to "292 images as quaternions" in Section 1, Section 3.1, and Section 5.1. We apologize for this oversight in the dataset description. In addition, we have enlarged the dataset with more than 10,000 stitched results, which are now released. If you encounter any problems, please feel free to contact us.

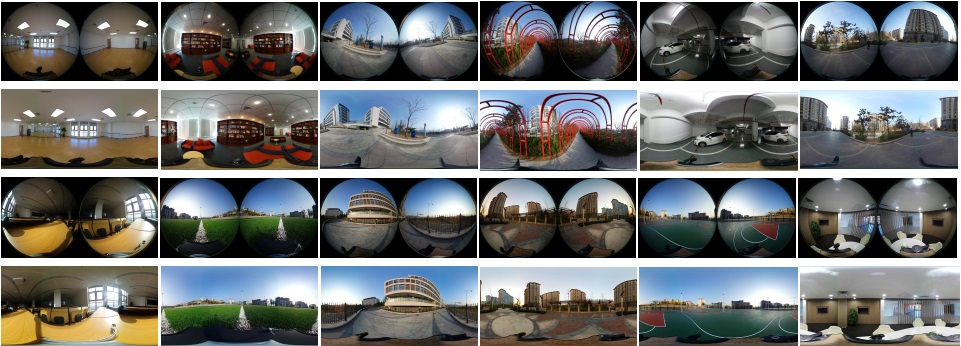

Dataset Visualization

Fisheye and cross-reference ground-truth images in the proposed CROSS datasets. The collected original fisheye images are shown in the first and third rows, while the ground-truth omnidirectional stitched images are shown in the second and fourth rows.

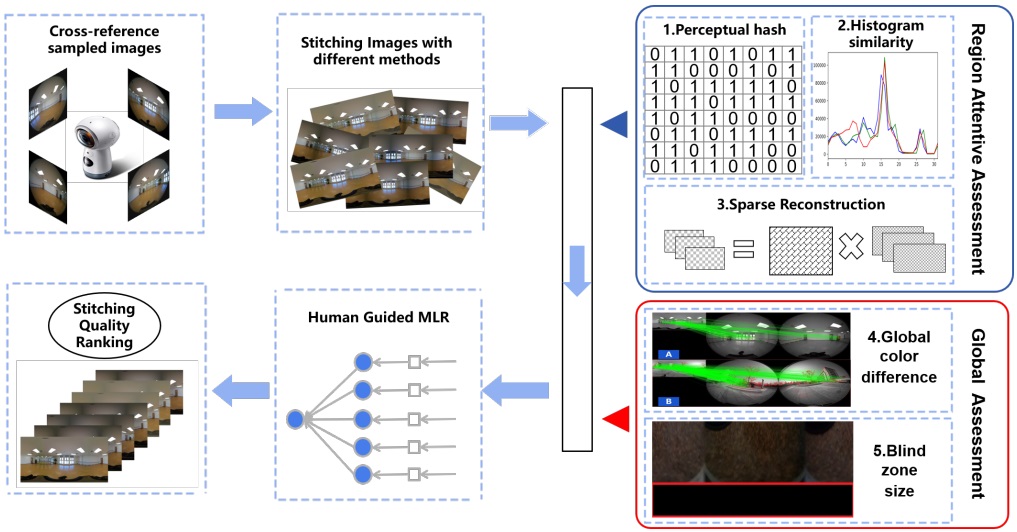

Approach

Overview of the OS-IQA framework. The stitched images are evaluated with three local metrics, which focus on the quality of the stitching region, and two global metrics, which evaluate environmental immersion. The fusion of these metrics is learned by a guided linear classifier to match human subjective evaluations.
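The fusion step described above can be illustrated with a minimal sketch. It assumes that each stitched image has five metric scores (three local, two global) and one mean opinion score (MOS), and it fits plain least-squares weights plus a bias. This is a stand-in for illustration only: the guided linear classifier used in the paper is not specified on this page, and the function names `fit_fusion_weights` and `fuse` as well as the synthetic data are hypothetical.

```python
def solve_linear_system(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    # Augmented matrix so that row operations apply to both sides.
    m = [list(row) + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("singular system; metric columns may be collinear")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    # Back substitution on the upper-triangular system.
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x


def fit_fusion_weights(metric_rows, mos):
    """Return least-squares weights (last entry is the bias) mapping metrics to MOS."""
    rows = [list(r) + [1.0] for r in metric_rows]  # append a constant bias feature
    k = len(rows[0])
    # Normal equations: (X^T X) w = X^T y
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * y for r, y in zip(rows, mos)) for i in range(k)]
    return solve_linear_system(xtx, xty)


def fuse(metrics, weights):
    """Combine one image's metric scores into a single predicted quality score."""
    return sum(w * m for w, m in zip(weights, metrics)) + weights[-1]


# Synthetic example: each row holds [local1, local2, local3, global1, global2].
# These values are made up and do not come from the CROSS dataset.
demo_metrics = [
    [1, 0, 0, 0, 0],
    [0, 1, 0, 0, 0],
    [0, 0, 1, 0, 0],
    [0, 0, 0, 1, 0],
    [0, 0, 0, 0, 1],
    [0, 0, 0, 0, 0],
    [0.5, 0.5, 0.5, 0.5, 0.5],
]
demo_mos = [0.35, 0.25, 0.15, 0.30, 0.20, 0.05, 0.55]
demo_weights = fit_fusion_weights(demo_metrics, demo_mos)
print(fuse([0.8, 0.6, 0.7, 0.9, 0.5], demo_weights))
```

A linear fusion keeps the learned weights directly interpretable: each weight indicates how strongly one metric contributes to the predicted subjective score under this simplified model.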

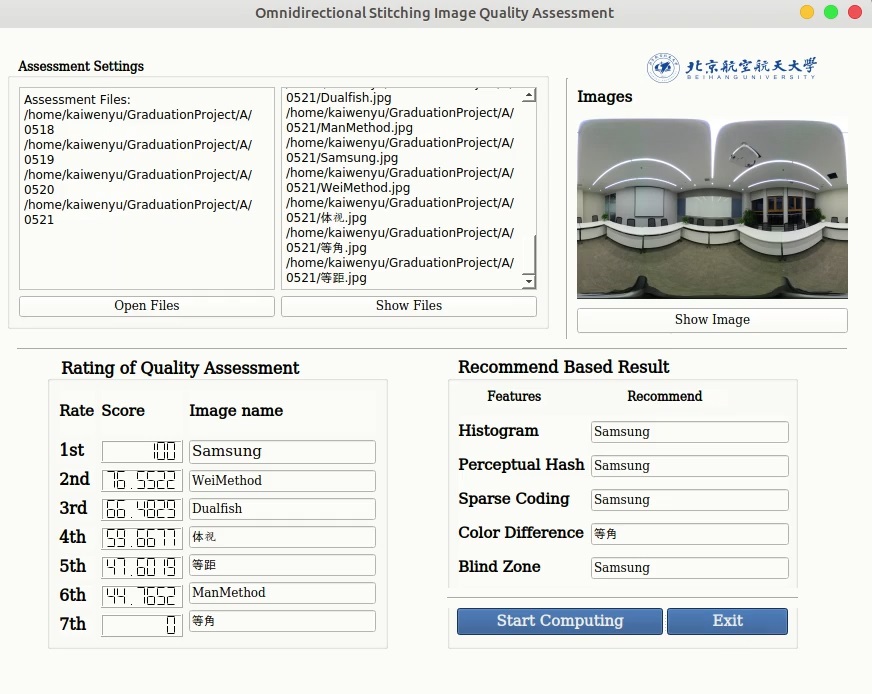

Visualization Demos

Software for our OS-IQA evaluation tools. The rating scores are normalized to the range 0 to 1 for easier viewing. For usage problems, contact kevinyu@buaa.edu.cn.
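The page does not state how the scores are mapped to the 0 to 1 range. One common choice is min-max scaling, sketched below as an assumption rather than as the tool's actual behavior; the function name `normalize_scores` is hypothetical.

```python
def normalize_scores(scores):
    """Rescale a list of scores linearly so the minimum maps to 0 and the maximum to 1."""
    lo, hi = min(scores), max(scores)
    if hi == lo:
        # All scores are identical, so there is no spread to rescale.
        return [0.0 for _ in scores]
    return [(s - lo) / (hi - lo) for s in scores]


# Made-up raw rating scores for three stitched results.
print(normalize_scores([2.0, 4.0, 6.0]))
```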

CROSS Dataset-v1

Fisheye and cross-reference ground-truth images in the proposed CROSS datasets.

CROSS Dataset-v2

Fisheye and cross-reference ground-truth images in the proposed CROSS datasets.

Update logs

2019/12: We have updated the CROSS-V2 dataset.

2020/01: The OS-IQA software is publicly available.

Citation

- Jia Li*, Kaiwen Yu, Yifan Zhao, Yu Zhang, Long Xu. Cross-Reference Stitching Quality Assessment for 360° Omnidirectional Images. ACM Conference on Multimedia (ACM MM), 2019.

- Paper: [PDF]
- Slides: [PPT, 26MB]
- Cross Dataset: [Dataset-v1, Baidu Drive, 6.16GB] [Dataset-v2, Baidu Drive, 28.16GB] [Ranking MOS, beta version]
- Model: Instruction Demo: [M4V, 3.1MB] Executable Code: [7z, 778MB]