Data

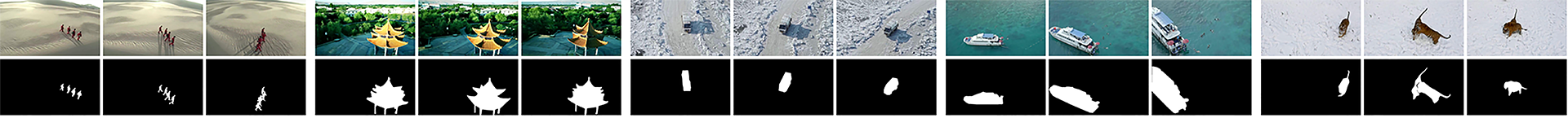

For primary object segmentation in aerial videos, we construct a large-scale dataset, denoted APD, for model training and benchmarking.

For more details on the APD dataset, please read the paper.

[arXiv]

[project]

The results below are for models evaluated against the ground truth on the APD test set, which contains 125 aerial videos.

Citations

These evaluations are released in conjunction with the paper "Hierarchical Deep Co-segmentation of Primary Objects in Aerial Videos".

If you use any of the results or data on this page, please cite:

@ARTICLE{8543646,
  author={J. Li and P. Yuan and D. Gu and Y. Tian},
  journal={IEEE MultiMedia},
  title={Hierarchical Deep Co-segmentation of Primary Objects in Aerial Videos},
  year={2018},
  pages={1-1},
  keywords={Videos;Avalanche photodiodes;Object segmentation;Task analysis;Drones;Training;Image segmentation},
  doi={10.1109/MMUL.2018.2883136},
  ISSN={1070-986X}
}

Download

The APD dataset can be downloaded from the following link:

[APD]

Metrics

mIoU

To assess performance, we use the standard Jaccard index, commonly known as the PASCAL VOC intersection-over-union (IoU) metric [1]:

$$\mathrm{IoU} = \frac{TP}{TP + FP + FN},$$

where TP, FP, and FN are the numbers of true-positive, false-positive, and false-negative pixels, respectively.
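As an illustration, the per-frame IoU can be sketched in Python with NumPy. This is a minimal sketch, not the benchmark's own evaluation code: it assumes each prediction has already been binarized into a foreground mask (the page does not say how continuous maps are thresholded), and returning 1.0 when both masks are empty is our own assumption for the 0/0 case.

```python
import numpy as np


def frame_iou(pred: np.ndarray, gt: np.ndarray) -> float:
    """Jaccard index IoU = TP / (TP + FP + FN) for one pair of binary masks."""
    pred = pred.astype(bool)
    gt = gt.astype(bool)
    tp = np.logical_and(pred, gt).sum()   # predicted foreground that is truly foreground
    fp = np.logical_and(pred, ~gt).sum()  # predicted foreground that is background
    fn = np.logical_and(~pred, gt).sum()  # foreground pixels the prediction missed
    denom = tp + fp + fn
    # Assumption (not from the benchmark): two empty masks count as perfect agreement.
    return 1.0 if denom == 0 else float(tp) / float(denom)


pred = np.array([[1, 1, 0],
                 [0, 1, 0]])
gt = np.array([[1, 0, 0],
               [0, 1, 1]])
print(frame_iou(pred, gt))  # TP=2, FP=1, FN=1 -> 0.5
```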

To evaluate performance on video data, we report the mean IoU over all frames of a video:

$$\mathrm{mIoU} = \frac{1}{N} \sum_{i=1}^{N} \mathrm{IoU}(\text{frame}_i),$$

where $\text{frame}_i$ is the $i$-th frame, $1 \le i \le N$, and $N$ is the total number of frames in the video.
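The frame average above can be sketched as follows. This is a hedged illustration only: `video_miou` is a hypothetical helper, the masks are assumed to be binary and already aligned frame by frame, and scoring a frame with two empty masks as 1.0 is our assumption. Note that the union of the two masks equals TP + FP + FN.

```python
from typing import Sequence

import numpy as np


def video_miou(preds: Sequence[np.ndarray], gts: Sequence[np.ndarray]) -> float:
    """mIoU = (1 / N) * sum over i of IoU(frame_i), for one video of N frames."""
    if len(preds) != len(gts) or len(preds) == 0:
        raise ValueError("need the same, non-zero number of predicted and ground-truth frames")
    scores = []
    for pred, gt in zip(preds, gts):
        p, g = pred.astype(bool), gt.astype(bool)
        inter = np.logical_and(p, g).sum()  # TP
        union = np.logical_or(p, g).sum()   # TP + FP + FN
        # Assumption: a frame where both masks are empty scores 1.0.
        scores.append(1.0 if union == 0 else inter / union)
    return float(np.mean(scores))


preds = [np.array([[1, 1], [0, 0]]), np.array([[0, 1], [1, 0]])]
gts = [np.array([[1, 0], [0, 0]]), np.array([[0, 1], [1, 0]])]
print(video_miou(preds, gts))  # frame IoUs 0.5 and 1.0 -> 0.75
```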

mWFM

wFM is the weighted F-beta measure proposed in "How to Evaluate Foreground Maps?" [2]:

$$\mathrm{wFM} = (1 + \beta^2)\,\frac{\mathrm{Precision}^w \cdot \mathrm{Recall}^w}{\beta^2 \cdot \mathrm{Precision}^w + \mathrm{Recall}^w},$$

where

$$\mathrm{Precision}^w = \frac{TP^w}{TP^w + FP^w}, \qquad \mathrm{Recall}^w = \frac{TP^w}{TP^w + FN^w}.$$

For the definitions of the weighted quantities $TP^w$, $FP^w$, and $FN^w$, please read the paper above.
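Once the weighted counts are available, combining them into wFM is a few lines of arithmetic. The sketch below covers only that final step: computing $TP^w$, $FP^w$, and $FN^w$ themselves (the distance-based error weighting of [2]) is not shown, and the default `beta2 = 1.0` is an assumption, since this page does not state the value of $\beta^2$ used. The small `eps` term is our own guard against division by zero.

```python
def weighted_f_measure(tp_w: float, fp_w: float, fn_w: float, beta2: float = 1.0) -> float:
    """wFM = (1 + beta^2) * P_w * R_w / (beta^2 * P_w + R_w).

    tp_w, fp_w, fn_w: weighted counts TP^w, FP^w, FN^w as defined in [2]
    (assumed to be computed elsewhere). beta2 = 1.0 is an assumed default.
    """
    eps = 1e-12  # avoids 0/0 when there are no weighted detections at all
    precision_w = tp_w / (tp_w + fp_w + eps)
    recall_w = tp_w / (tp_w + fn_w + eps)
    return (1.0 + beta2) * precision_w * recall_w / (beta2 * precision_w + recall_w + eps)
```

For example, $TP^w = 3$ and $FP^w = FN^w = 1$ give $\mathrm{Precision}^w = \mathrm{Recall}^w = 0.75$, so wFM is approximately 0.75 for any $\beta^2$.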

To evaluate performance on video data, we report the mean wFM over all frames of a video:

$$\mathrm{mWFM} = \frac{1}{N} \sum_{i=1}^{N} \mathrm{wFM}(\text{frame}_i),$$

where $\text{frame}_i$ is the $i$-th frame, $1 \le i \le N$, and $N$ is the total number of frames in the video.

Runtime

Runtime is the time, in seconds, taken to process one frame.
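The page does not describe how runtimes were measured (hardware, warm-up, whether I/O is included), so the following is only one plausible sketch: it times a hypothetical per-frame function `segment` over a list of frames with `time.perf_counter` and divides the total by the number of frames.

```python
import time
from typing import Any, Callable, Sequence


def seconds_per_frame(segment: Callable[[Any], Any], frames: Sequence[Any]) -> float:
    """Average wall-clock seconds that the hypothetical `segment` spends per frame."""
    if len(frames) == 0:
        raise ValueError("need at least one frame")
    start = time.perf_counter()
    for frame in frames:
        segment(frame)  # result discarded; only the elapsed time matters here
    elapsed = time.perf_counter() - start
    return elapsed / len(frames)
```

Dividing total time by the frame count also works for video-based methods that process a whole clip in one call; in that case one would time the single call and divide by the clip length.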

Results


| name | code | video | deep | mIoU | mWFM | runtime (s) |
|---|---|---|---|---|---|---|
| DSR | matlab | no | no | 0.222 | 0.329 | 4.03 |
| MB+ | matlab | no | no | 0.220 | 0.300 | 0.02 |
| GMR | matlab | no | no | 0.202 | 0.258 | 0.46 |
| SMD | matlab | no | no | 0.294 | 0.365 | 0.89 |
| RBD | matlab | no | no | 0.243 | 0.357 | 0.15 |
| ELE+ | matlab | no | no | 0.371 | 0.417 | 7.80 |
| HDCT | matlab | no | no | 0.221 | 0.396 | 3.35 |
| RFCN | matlab | no | yes | 0.451 | 0.510 | 1.00 |
| DHSNet | matlab | no | yes | 0.493 | 0.581 | 0.03 |
| DSS | matlab | no | yes | 0.400 | 0.517 | 0.82 |
| FSN | matlab | no | yes | 0.443 | 0.505 | 0.08 |
| DCL | matlab | no | yes | 0.444 | 0.515 | 0.47 |
| SSA | matlab | yes | yes | 0.333 | 0.414 | 6.76 |
| FST | matlab | yes | yes | 0.319 | 0.382 | 4.52 |
| MSG | matlab | yes | yes | 0.153 | 0.182 | 14.3 |
| RMC | matlab | yes | yes | 0.205 | 0.233 | 7.42 |
| NRF | matlab | yes | yes | 0.496 | 0.551 | 0.18 |
| HDC | matlab | yes | yes | 0.582 | 0.649 | 0.73 |
| CB | matlab | no | no | 0.108 | 0.166 | -- |
| BSCA | matlab | no | no | 0.137 | 0.217 | -- |
| ELD | matlab | no | yes | 0.285 | 0.362 | -- |
| LEGS | matlab | no | yes | 0.190 | 0.249 | -- |
| MCDL | matlab | no | yes | 0.255 | 0.133 | -- |
| HS | matlab | no | no | 0.174 | 0.268 | -- |
| GP | matlab | no | no | 0.100 | 0.164 | -- |

References

[1] Mark Everingham, S. M. Ali Eslami, Luc J. Van Gool, Christopher K. I. Williams, John M. Winn, Andrew Zisserman, "The Pascal Visual Object Classes Challenge: A Retrospective," International Journal of Computer Vision, vol. 111, no. 1, pp. 98-136, 2015.

[2] Ran Margolin, Lihi Zelnik-Manor, Ayellet Tal, "How to Evaluate Foreground Maps?," IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pp. 248-255, 2014.